A professor of literature chronicles her hearing loss journey through her personal and professional spheres.

By Roberta Rubenstein Larson

The author during a visit to the Canadian Rockies.

When did I first recognize that I might be losing my hearing? Perhaps it was when I became quite ill while in college and was hospitalized for a week with a severe case of mononucleosis complicated by strep throat. Among the treatments I received was streptomycin, an antibiotic that was effective in treating strep throat, tuberculosis, and other infections but was later discovered to be ototoxic, causing irreversible damage to cochlear hair cells and permanent hearing loss in some patients. At the time, however, I knew nothing about these potential side effects.

Several years later, signs of my hearing loss began to be visible (or, I should say, audible). During my early 20s, while I was visiting vacationing family members at a lake in Maine, someone commented on the haunting cries of loons calling to one another. What loons? I could not hear these unique water birds’ melancholy songs, which span many frequencies, including high-pitched notes: the same upper frequencies at which many human hearing losses are first detected during audiological exams.

Not too long after I became aware of my hearing loss, I awoke in fright one morning. Every sound was significantly distorted, as if it had been drastically remixed inside a noisy tunnel. I immediately contacted an otolaryngologist, who diagnosed the condition as sudden sensorineural hearing loss (SSHL).

Though the cause of this audiological crisis is—like that of most hearing losses—unknown, the doctor mentioned that it may be triggered by common conditions like earwax or viruses, or more serious conditions such as head injury, side effects of certain medications, or autoimmune reactions.

It may also be triggered by a reaction to sulfites used in winemaking, which I speculated was the cause in my case, since I had drunk wine at a party the previous evening. Treatment with steroid medication restored some, but not all, of my lost hearing. For decades afterward I avoided red wine entirely, regarding it as the cause of an allergic reaction I hoped never to experience again.

A Progressive Loss

During the early years of my career as a professor of literature at American University in Washington, D.C., my hearing loss inexplicably progressed. I struggled to maintain auditory competence in the classroom, boosting the hearing in my better ear through a series of increasingly powerful hearing aids. My poorer ear could not be aided because the interval between barely audible and painfully loud sounds was too small.

I dreaded the annual audiological exam. Sitting in the soundproof cubicle, I concentrated intensely on pure tones delivered at different frequencies and volumes or strained to repeat words and phrases spoken by a recorded voice. Each audiogram confirmed the downward slope of the graph as my hearing loss progressed from moderate to severe to profound.

As a professor in midcareer whose teaching directly depended on the spontaneity of class discussions rather than prepared lectures, I began to suffer from depression. I faced the prospect of early retirement from a profession I loved. Having received positive student feedback and several teaching awards, I knew I was an effective teacher—except for the challenges to comprehension posed by students who mumbled, spoke softly, or commented with a hand over their mouth.

The loss of hearing is a profoundly emotional occurrence. It is disheartening to miss key portions of dialogue in ordinary conversations, telephone chats, film voiceovers, theater performances, and virtually every other aural context. It is demeaning to be perceived as cognitively impaired when one simply asks for directions and misses crucial details in the answer.

During the peak years of the Covid pandemic, face masks made (and still make) life much more difficult for people with hearing loss. While protecting the wearer, a mask muffles speech and removes the possibility of reading lips. Missed words particularly affect the aural rhythms of jokes, which typically depend on wordplay and a rapid reversal of listeners’ expectations. Just when one anticipates the emotional payoff that elicits laughter, the volume of the speaker’s voice drops with the punchline. We miss the joke.

In response to my diminishing hearing, I developed two coping mechanisms over time. First, I began to read lips without any formal instruction; second, by concentrating to comprehend speech as fully as I could, I became a very attentive listener, a valuable skill for a professor. To improve my lipreading proficiency, I took several classes offered by a woman who had suddenly lost all her hearing to a severe viral infection when she was only 21 years old and had since become a skilled speech reader and teacher.

Ready for Implants

At this point, during my 50s, my audiologist informed me that I was profoundly deaf in both ears and recommended that I consider getting a cochlear implant (CI). With some trepidation, I proceeded, receiving the first of two implants in 2000. This remarkable device works by converting sounds picked up by an external speech processor into digital signals that a surgically implanted electrode array delivers as electrical stimulation, bypassing the damaged hair cells in the cochlea to stimulate the auditory nerve directly.

My doctor was John Niparko, M.D., the distinguished otolaryngologist and ear surgeon at Johns Hopkins University Hospital, who died in 2016 at age 61. He agreed with my preference to have the implant in my poorer ear. Because I simply could not imagine actually hearing via digitally processed sounds, I reasoned that I had less to lose by placing the implant in the unaided ear, which had not perceived meaningful sounds for years. Although otolaryngologists have disagreed about whether implanting the better or the poorer ear is the more successful strategy, clinical research has found the difference in outcomes to be inconsequential.

Fifteen years later, I received a second implant, in my once “better” ear. Its speech processor, made by Advanced Bionics like my first one, featured many enhancements, including rechargeable batteries that last 18 hours on a single charge rather than just four. Disappointingly, however, the second implant has been less successful than the first.

Because of technical advancements during the 15-year interval between my two implants, the two processors were essentially incompatible. For the first six months after the newer device was implanted, I experienced the audiological equivalent of double vision as my brain tried to reconcile two dissimilar sound streams. Although the separate sound streams eventually merged into one, my brain continues to rely primarily on the older implant.

Outpatient CI surgery is followed by a six-week recovery period while the surgical site heals. Before my first implant was activated, I wrote in the journal I kept during this unparalleled experience:

“I’m looking forward to this adventure, as my brain learns to make sense of sounds I haven’t heard for years. I hope that in due course I’ll be able to hear without always having to see the speaker, use a phone, hear in the dark, and—maybe—enjoy classical music again. I also hope that, when I don’t have to struggle so hard to hear, I’ll relax more in my life.”

Then: The CI audiologist “turned on” the device and… I heard sounds! The problem was, I could make no sense of them. (I had been told to expect this first stage.) When my husband drove us home on activation day, I heard something coming from the car radio but had no idea whether it was speech or music.

Sound recognition and speech comprehension do not arrive instantaneously, the way water flows the moment one turns on a tap. Rather, new CI recipients are assisted by specially trained CI audiologists, who fine-tune (“map”) the digital settings in the external processor to match the recipient’s sound comprehension profile, and by speech therapists, who help them pick out speech sounds and words from a garbled stream of sound. Over time, CI recipients retrain their brains to make sense of sounds that were initially incomprehensible.

There were so many new sounds, especially environmental ones, to identify. Before my implant, I hadn’t realized that for many years I had not heard the birds singing in my garden. Once I began to hear them, I recalled the loon calls I had been unable to hear years earlier, the first evidence of my hearing loss, and I cried with joy at these newly audible dimensions of my environment.

Initially, hearing many sounds that I did not recognize, I would ask my husband or children, “What was that?” Everyday sounds, such as the beep a checkout scanner makes as it reads the barcode on groceries, were entirely new to me. I was surprised to discover that the wood floors in my house squeaked and that the refrigerator, dishwasher, and other appliances hummed. I began to hum myself.

As I became more proficient in interpreting sounds and speech, I realized that the learning process undertaken by new CI recipients is analogous to the way infants learn language, beginning when they first recognize their own name and advancing as repeated words emerge from a bewildering stream of noise. This stage of comprehension is essential to development, and it begins long before babies speak their first words.

It was thrilling to be immersed in this process a second time, now as an adult who could observe, even while experiencing it, what was happening not in my ear but in my brain. When I attended my first child’s college graduation a year after my implant, I was elated to understand almost every word spoken, not only by my daughter and her friends and professors but also by that year’s commencement speaker, Toni Morrison.

Hearing Gains

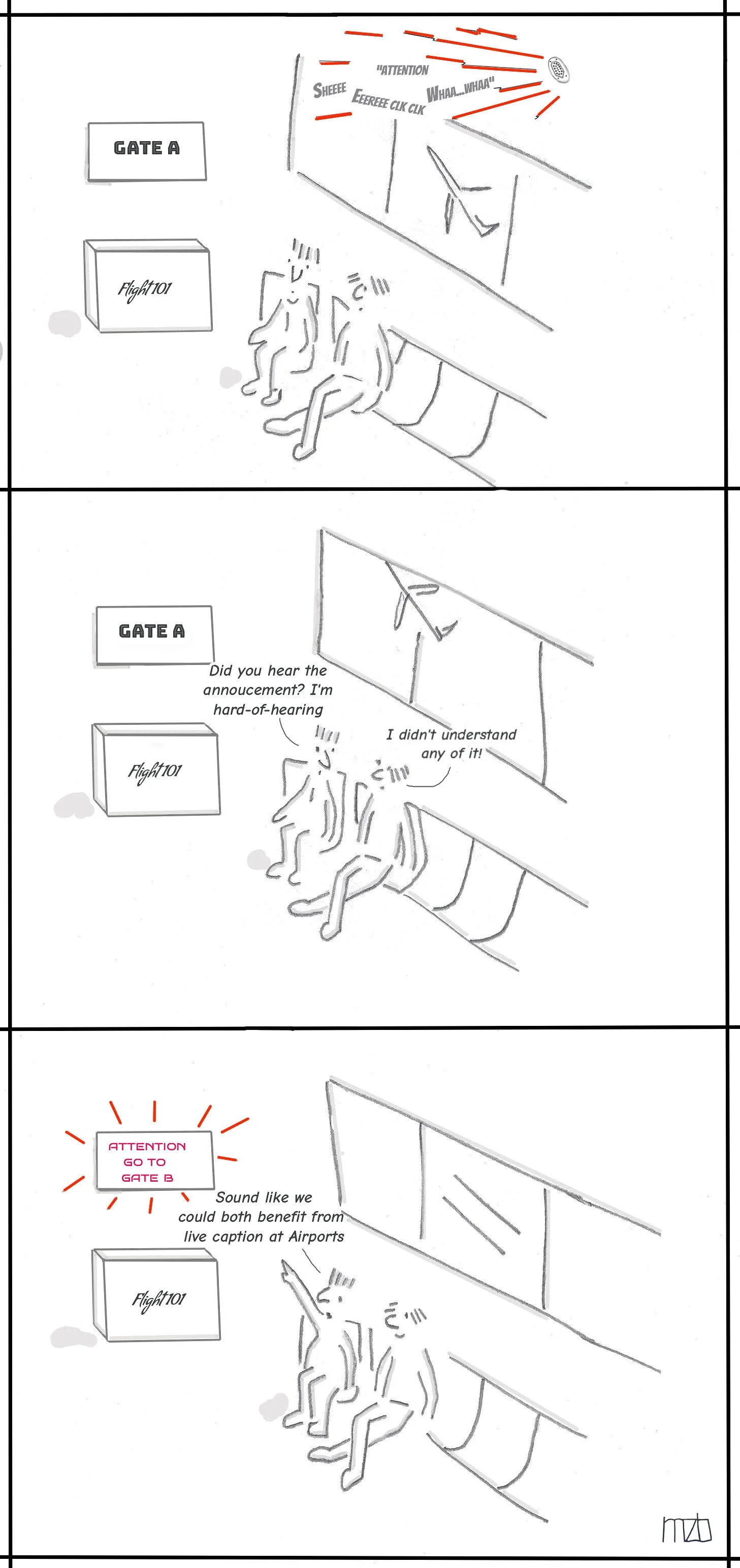

Placed in context, my hearing losses have been accompanied by numerous hearing gains, as is true for so many other people with hearing loss. We are fortunate beneficiaries of a technological revolution that includes not only cochlear implants but also increasingly sophisticated hearing aids, assistive devices, and apps for theaters, cinemas, and mobile phones. Increasingly, we can access captions on computers, televisions, and phones as well as in many live theaters and cinemas.

Most of these accommodations are the result of the Americans with Disabilities Act, the 1990 civil rights law that “[prohibits] discrimination against individuals with disabilities in all areas of public life….” For the most part, people with hearing loss have entered a kindlier environment that has liberated us from the woefully silent, lonely world that those before us endured for most of history.

I feel exceptionally fortunate. My hearing journey has ultimately been a positive one, both personally and professionally. Although I once dreaded being forced into early retirement by my profound hearing loss, I instead continued to teach for another 25 years. After 51 deeply gratifying years in the classroom, I retired from American University as Professor Emerita of Literature in May 2020, just three years ago.

Roberta Rubenstein Larson lives in Chevy Chase, Maryland. This appeared in the Winter 2023 issue of Hearing Health magazine.
