By Subong Kim, Ph.D.

As a hearing scientist, one of the questions that keeps me up at night is this: Why do two people with nearly identical hearing test results have such wildly different experiences trying to understand speech in noisy places?

Picture two patients who come in with the same audiogram, meaning they have the same hearing sensitivity when tested with pure tones. Yet one can follow conversations at a loud party, while the other feels completely lost and overwhelmed.

It’s the same story with noise reduction (NR) in hearing aids, which we also examined in a recently published study. NR is a common feature in modern hearing aids, but some people love it, while others hate how it changes the sound of speech.

These huge differences make me believe there must be individual characteristics that shape how each person handles noise and benefits from NR. But finding a way to measure those differences has been surprisingly difficult.

In our recent research, my students and I set out to evaluate such individual characteristics using two approaches. First, we looked at brain responses, aiming to see how well someone’s brain distinguishes speech from noise.

Second, we wanted to capture people’s subjective experience in a more systematic way than just handing out a simple questionnaire. We hoped this would reveal insights about personal noise tolerance that might not show up in standard hearing tests.

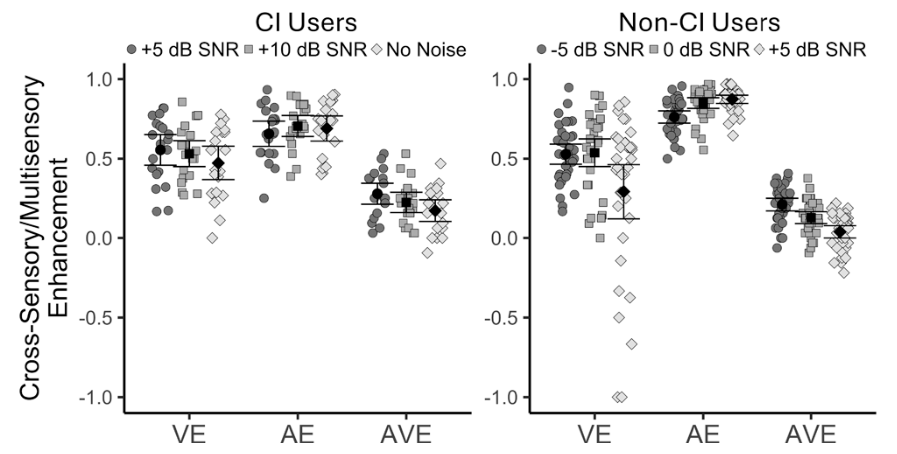

Charts showing how well two different groups of people understood speech: (A) in background noise without help, (B) improvement with noise reduction setting 1, and (C) improvement with noise reduction setting 2. Credit: Kim et al./Audiology Research

For the brain measures, we focused on something called the neural signal-to-noise ratio (neural SNR). This essentially measures how strongly the brain responds to speech sounds compared to background noise. A higher neural SNR means a person’s brain is better at filtering out noise and locking onto speech, which is critical for understanding conversations in challenging environments.

On the subjective side, we developed a task that systematically examined how much people are bothered by three different aspects of noise: how annoying the noise sounds, how much it interferes with speech, and how much effort it takes to listen. We hoped that combining these factors would help us identify individual noise-tolerance profiles.

Our findings were eye-opening. The neural SNR performed very well: it clearly predicted both how well people understood speech in noise and how much benefit they got from NR in hearing aids. People with higher neural SNRs generally performed better in noise and showed less need for external NR. In contrast, those with lower neural SNRs seemed to benefit more from NR algorithms.

But the subjective noise-tolerance profiles didn’t turn out to be as sensitive as we’d hoped. While we could cluster people into groups based on their responses, these groupings didn’t strongly predict who would perform better in noise or who would benefit more from NR.

Still, I believe it’s crucial to integrate both physiological measures and subjective experiences. After all, the first thing we hear from our patients is how they feel about their hearing.

Our study, published in the journal Audiology Research in July 2025, is a step toward combining both worlds to truly personalize hearing care. We’ll keep working to unlock the secrets behind why people with the same hearing sensitivity hear so differently in the real world.

A 2022–2023 Emerging Research Grants scientist, Subong Kim, Ph.D., is an assistant professor of audiology in the department of communication sciences and disorders at Montclair State University in New Jersey.
