By Matthew Masapollo, Ph.D.

When we talk, we have the impression that we don't have to do much: we have an idea, our mouth starts to move, and sound comes out, with no sense of the steps involved in the process. Yet anyone who has ever tried to speak a foreign language can readily attest that producing its sound sequences in a fluent, natural, and coordinated way is far from a trivial skill. The challenges that individuals born with profound sensorineural hearing loss encounter in learning to speak also hint at the complexity involved.

During typical conversation, humans use over 100 different muscles in the vocal tract to produce six to nine syllables per second, making speech one of the fastest types of motor behavior. More complicated still, the various articulators of the vocal tract (the lips, tongue, jaw, and glottis) do not move in an isolated, independent manner. Rather, their movement patterns are lawfully orchestrated with one another in space and time. Unfortunately, no single instrument can simultaneously measure the complex motion patterns of all the articulators involved in speech production.

By combining electromagnetic articulography and electroglottography, researchers can simultaneously record lip, tongue, jaw, and glottal motion during speech production without electromagnetic interference. Credit: University of Florida image by Hillary Carter and Shena Hays

Recently, my team and I demonstrated that two instruments with complementary strengths, electromagnetic articulography (EMA) and electroglottography (EGG), can be combined to concurrently measure laryngeal and supralaryngeal speech movements. EMA uses magnetic fields to track the positions of sensors affixed to the lips, tongue, and jaw during speech, and EGG measures electrical impedance across the larynx during voice production.

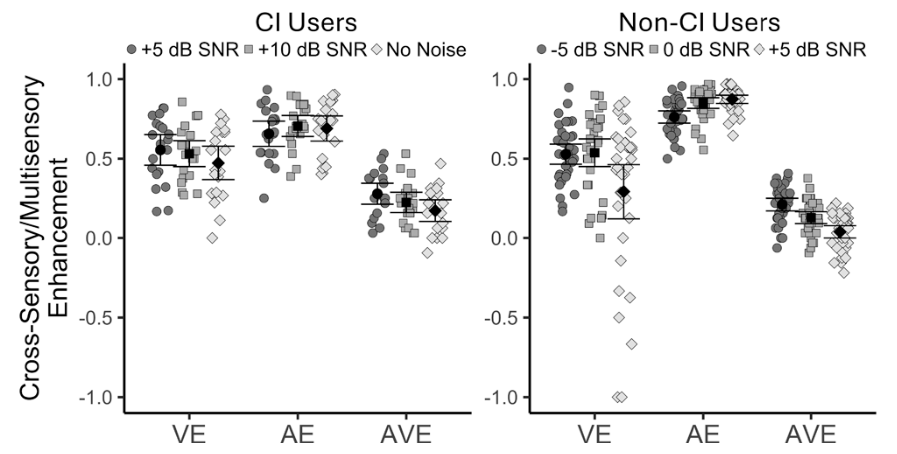

We plan to use these instruments to better characterize how congenitally deaf talkers who received cochlear implants (CIs) and their typical-hearing peers control and coordinate laryngeal and supralaryngeal movements. The approach, published in JASA (Journal of the Acoustical Society of America) Express Letters in September 2022, is novel because most clinical interventions for children with CIs focus on listening to speech. In our well-intentioned efforts to promote listening experiences, we may be overlooking the importance of practice in producing speech.

We hypothesize that providing CI wearers with orofacial somatosensory inputs associated with speech production may “prime” motor and sensory brain areas involved in the sensorimotor control of speech, thereby facilitating perception of the degraded acoustic signal experienced through their processors.

A 2022–2023 ERG scientist, Matthew Masapollo, Ph.D., is an assistant professor in the department of speech, language, and hearing sciences, and the director of the University of Florida Speech Communication Lab.
