By Kenneth Vaden, Ph.D.

Speech recognition in noise is typically poorer for older adults than younger adults, which can reflect a combination of sensory and cognitive-perceptual declines. Considerable variability, with some older adults performing nearly as well as younger adults, may be explained by differences in listening effort or in the cognitive resources available to facilitate speech recognition. Neuroimaging evidence suggests that a set of frontal cortex regions is engaged with increasing listening difficulty, but it is unclear how this might facilitate speech recognition. Performance on speech-recognition-in-noise tasks has been linked to activity in cingulo-opercular regions of the frontal cortex, in what appears to be a performance monitoring role. More extensive frontal cortex activity during challenging listening tasks has been shown for older compared to younger adults, which could reflect task difficulty.

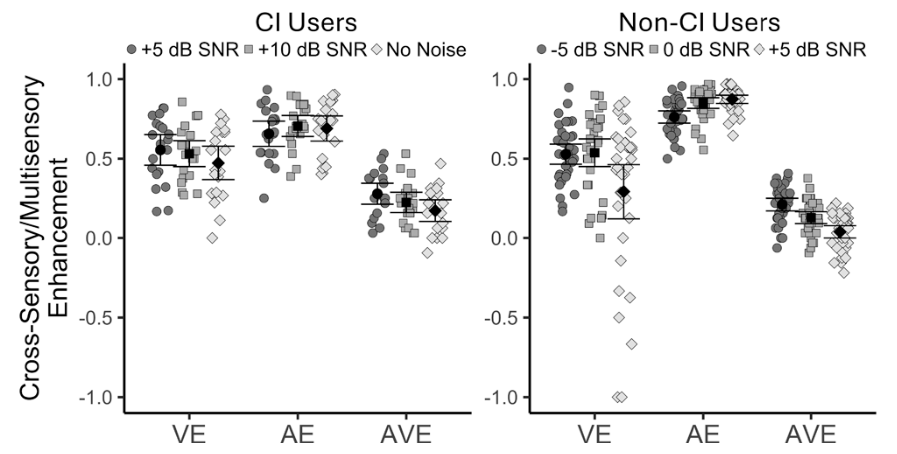

This figure from the NeuroImage paper shows that the word recognition task significantly increased activity throughout superior temporal regions and cingulo-opercular regions of cortex. Credit: Vaden et al./NeuroImage

Our study, published in the journal NeuroImage in June 2022, examined neural mechanisms that may support speech understanding in noise and other difficult recognition tasks. In relatively quiet acoustic conditions, speech recognition often occurs very rapidly, mapping sensory input (sound) onto stored mental representations. However, when speech information is distorted or limited by background noise, recognition often becomes slow and effortful.

Recognition based on incomplete sensory information (e.g., noisy conditions) is theorized to involve perceptual decision-making processes that collect evidence until a recognition criterion is met. A perceptual decision-making theoretical framework has been used to account for the speed-accuracy tradeoff: fast responses are associated with lower accuracy, and slow responses with higher accuracy.
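The speed-accuracy tradeoff can be illustrated with a toy simulation. The Python sketch below is not the computational model fitted in the study; it is a generic random-walk accumulator, and the names `simulate_trial`, `summarize`, `drift`, and `threshold`, along with every parameter value, are assumptions chosen purely for illustration. Here `drift` stands in for how quickly evidence is collected and `threshold` for the decision criterion.

```python
import random


def simulate_trial(drift, threshold, rng, noise_sd=1.0, dt=0.01, max_steps=100_000):
    """Accumulate noisy evidence until it hits +threshold (correct) or -threshold (error).

    Returns (correct, decision_time). All parameters are illustrative assumptions.
    """
    evidence = 0.0
    for step in range(1, max_steps + 1):
        # Each step adds a small systematic push (drift) plus random noise.
        evidence += drift * dt + rng.gauss(0.0, noise_sd) * dt ** 0.5
        if evidence >= threshold:
            return True, step * dt
        if evidence <= -threshold:
            return False, step * dt
    # No boundary reached within max_steps; counted as an error here.
    return False, max_steps * dt


def summarize(drift, threshold, n_trials=2000, seed=0):
    """Return (accuracy, mean decision time) over many simulated trials."""
    rng = random.Random(seed)
    results = [simulate_trial(drift, threshold, rng) for _ in range(n_trials)]
    accuracy = sum(correct for correct, _ in results) / n_trials
    mean_time = sum(time for _, time in results) / n_trials
    return accuracy, mean_time


if __name__ == "__main__":
    # Same evidence quality, two different decision criteria.
    for threshold in (0.5, 1.5):
        accuracy, mean_time = summarize(drift=1.0, threshold=threshold)
        print(f"threshold={threshold}: accuracy={accuracy:.2f}, mean time={mean_time:.2f}")
```

In this sketch, raising `threshold` makes simulated decisions slower but more accurate, while lowering `drift` at a fixed threshold makes them less accurate, which is the qualitative pattern the framework is meant to capture.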

We used an fMRI (functional magnetic resonance imaging) experiment with middle-aged and older participants to examine perceptual decision-making activity, particularly in cingulo-opercular regions across the frontal cortex. Participants were instructed to listen to a word presented in a multi-talker babble environment (e.g., cafeteria noise), and repeat the word aloud. A sparse design with long, quiet gaps between scans (6.5 seconds) allowed speech to be presented without scanner acquisition noise and minimized speech-related head movement during image collection.

We found that word recognition was significantly less accurate with increasing age and in the poorer signal-to-noise ratio condition. A computational model fitted to task response latencies showed that evidence was collected more slowly and decision criteria were lower in the poorer of two signal-to-noise ratio conditions.

Our fMRI results replicated an association between higher pre-stimulus BOLD contrast (i.e., prior to each word presentation) measured in a cingulo-opercular network in the frontal cortex and subsequent correct word recognition in noise. Consistent with neuroimaging studies that linked cingulo-opercular regions in the frontal cortex with decision criteria, our results also suggested that decision criteria were higher and more cautious on trials with elevated activity. The takeaway is that perceptual decision-making accounted for changes in recognition speed and was linked to frontal cortex activation that is commonly observed in difficult listening tasks.

In summary, our study indicates that 1) perceptual decision-making is engaged for difficult word recognition conditions, and 2) frontal cortex activity may adjust how much information is collected to benefit word recognition task performance.

These findings potentially connect neuroscientific research on perceptual decision-making, often focused on visual object recognition, with our research on age-related listening difficulties and (nonauditory) frontal cortex function in these tasks. Similar neural mechanisms may help guide how a person drives a car in heavy fog or listens to a friend speaking in a crowded restaurant. In either of these cases, a related set of brain networks may collect evidence until recognition occurs. Additional studies will characterize how evidence collection and decision criteria relate to hearing loss and older age.

A 2015 Emerging Research Grants scientist generously funded by Royal Arch Research Assistance, Kenneth Vaden, Ph.D., is a research assistant professor in the department of otolaryngology–head and neck surgery at the Medical University of South Carolina, where HHF board member Judy R. Dubno, Ph.D., one of the paper’s coauthors, is a professor.
