By Matthew Masapollo, Ph.D.

During skilled speech production, sets of independently moving articulators, such as the tongue and jaw or lips and jaw, work cooperatively to achieve target constrictions at various locations along the vocal tract.

For example, during the production of the "t" and "d" sounds, the tip of the tongue must rise and form a tight constriction against the alveolar ridge of the palate (the bony area behind the upper front teeth). To achieve this target constriction, the jaw and tongue work in concert to bring the tongue-tip up to the palate.

People who are hard of hearing show deficits in their ability to control these types of coordinated movements in a precise and consistent manner, suggesting that the choreography in the vocal tract during speech relies on auditory input.

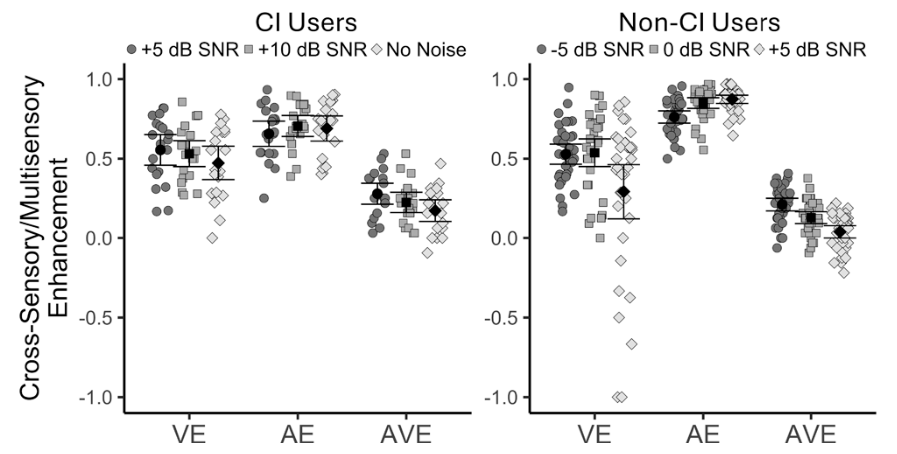

In our study, published in the Journal of the Acoustical Society of America in September 2024, we used a technique called electromagnetic articulography (EMA) to record and track the movements of the jaw and tongue-tip during the production of the "t" and "d" sounds, both when speakers could hear their own speech and when they could not (that is, without and with auditory masking).

Left: This diagram of the vocal tract shows where sensors were placed. Orange dots mark sensors that track mouth and tongue movement, while blue dots show sensors that stay fixed on the head. LM = left mastoid (side of the skull behind the ear); RM = right mastoid; UI = upper incisor (front tooth); J = jaw. Right: Setup used to mask sound during speech, with sensors tracking mouth movements through electromagnetic articulography (EMA). Credit: Masapollo et al./JASA

Participants produced a variety of utterances containing the target "t" or "d" sounds at different speaking rates and under the two listening conditions. During the masking condition, participants heard multitalker babble through inserted earphones at 90 decibels sound pressure level (dB SPL), which rendered their own speech inaudible. The masker was presented on a very restricted timescale: just a few seconds while participants produced a given utterance.

We found that during masking, the temporal coordination between the tongue and jaw was less precise and more variable. This is the first study to directly examine the role of auditory input in the real-time coordination of speech movements.

Our results are consistent with the theory that people rely on auditory information to coordinate the motor control of their vocal tract in service of speech production, and they open up many new, critically important questions about people with congenital auditory deficits.

The long-term degraded auditory input that occurs with congenital hearing loss is undoubtedly many orders of magnitude greater than the brief masking manipulation carried out in this laboratory situation. We theorize that, compared with people with typical hearing, people who use cochlear implants will rely more on how their mouth and tongue feel, relative to auditory information, to control speech movements. This is because the acoustic signal available through cochlear implants can be degraded.

We are currently using EMA to test this hypothesis by recording speakers' articulatory movements while they talk with their cochlear implant (CI) speech processors turned on versus off.

A 2022–2023 Emerging Research Grants scientist, Matthew Masapollo, Ph.D., is now a research associate at the motor neuroscience laboratory in the department of psychology at McGill University.
