By Carol Stoll

Ménière’s disease is characterized by fluctuating hearing loss, vertigo, tinnitus, and ear fullness, but the causes of these symptoms are not well understood. Past research has suggested that a damaged blood labyrinthine barrier (BLB) in the inner ear may be involved in the pathophysiology of inner ear disorders. Gail Ishiyama, M.D., a 2016 Emerging Research Grants (ERG) recipient of Hearing Health Foundation (HHF), was the first to test this proposition by using electron microscopy to analyze the BLB in individuals with and without Ménière’s disease. Ishiyama’s research was fully funded by HHF and was recently published in Scientific Reports, a Nature Publishing Group journal.

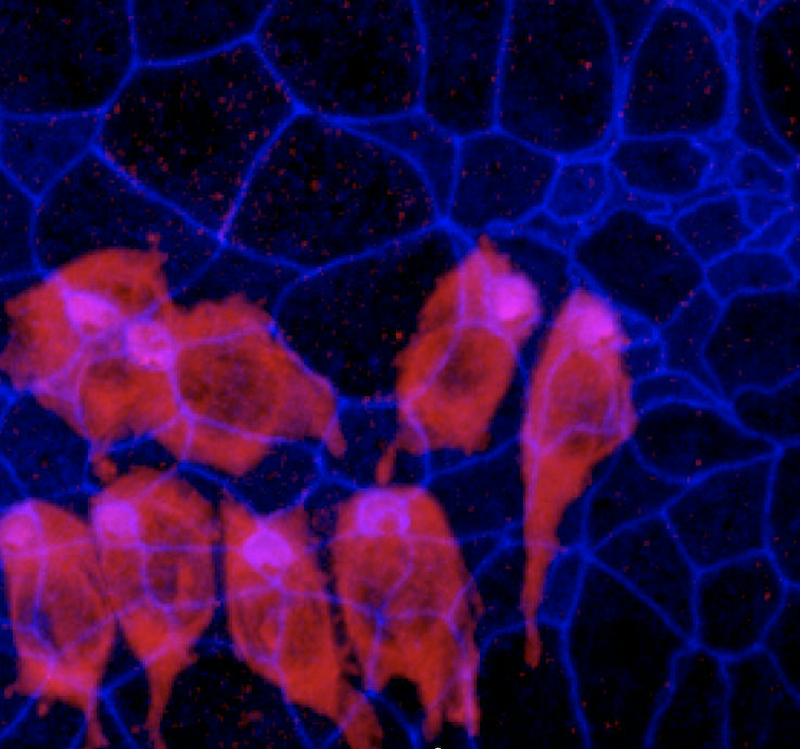

The BLB in a Ménière’s disease capillary. a) Capillary located in the stroma of the macula utricle from a Ménière’s subject (55-year-old male). The lumen (lu) of the capillary is narrow, vascular endothelial cells (vec) are swollen, and the cytoplasm is vacuolated (pink asterisks). b) Diagram showing the alterations in the swollen vec; microvacuoles (v) are also abundant. Abbreviations: rbc, red blood cells; tj, tight junctions; m, mitochondria; n, cell nucleus; pp, pericyte process; pvbm, perivascular basement membrane. Bar is 2 microns.

The BLB is composed of a network of vascular endothelial cells (VECs) that line all capillaries in the inner ear organs to separate the vasculature (blood vessels) from the inner ear fluids. A critical function of the BLB is to maintain the proper composition and levels of inner ear fluid via selective permeability. However, the inner ear fluid space in patients with Ménière’s has been shown to be ballooned out due to excess fluid. Additionally, Ishiyama’s group had previously identified permeability changes in magnetic resonance imaging studies of Ménière’s patients, which may be an indication of BLB malfunction.

Ishiyama’s research team used transmission electron microscopy (TEM) to investigate the fine cellular structure of the BLB in the utricle, a balance-regulating organ of the inner ear. Two utricles were obtained at autopsy from individuals with no vestibular or auditory disease. Five utricles were surgically extracted from patients with severe stage IV Ménière’s disease with profound hearing loss and intractable recurrent vertigo spells, who were undergoing surgery as curative treatment.

Microscopic examination revealed significant structural differences in the BLB within the utricle between individuals with and without Ménière’s disease. In the normal utricle samples, the VECs of the BLB contained numerous mitochondria and very few fluid-containing organelles called vesicles and vacuoles. The cells were connected by tight junctions to form a smooth, continuous lining, and were surrounded by a uniform membrane.

However, samples with confirmed Ménière’s disease showed varying degrees of structural changes within the VECs. While the VECs remained connected by tight junctions, they contained an increased number of vesicles and vacuoles, which may cause swelling and degeneration of other organelles. In the most severe case, there was complete VEC necrosis, or cell death, and a severe thickening of the basement membrane surrounding the VECs.

The documentation of the cellular changes in the utricle of Ménière’s patients was the first of its kind and has important implications for future treatments. Ishiyama’s study concluded that the alteration and degeneration of the BLB likely contribute to fluid changes in the inner ear organs that regulate hearing and balance, thus causing the symptoms of Ménière’s. Further scientific understanding of the specific cellular and molecular components affected by Ménière’s could lead to the development of new drug therapies that target the BLB to decrease vascular damage in the inner ear.

Gail Ishiyama, M.D., is a 2016 Emerging Research Grants recipient. Her grant was generously funded by The Estate of Howard F. Schum.