By Yishane Lee

Standard hearing tests may not account for the difficulty some individuals have understanding speech, especially in noisy environments, even though the sounds are loud enough to hear. To better identify and treat these central auditory processing disorders that appear despite normal ear function, 2016 Emerging Research Grants (ERG) scientist Richard A. Felix II, Ph.D., and colleagues have been investigating how the brain processes complex sounds such as speech.

In the past, speech processing research has focused on higher-level brain regions like the auditory cortex, but there is strong evidence showing that lower-level subcortical areas may play a significant role in hearing disorders. In their paper “Subcortical Pathways: Toward a Better Understanding of Auditory Disorders,” published online in the journal Hearing Research on Jan. 31, 2018, Felix and team review studies that examine the auditory brainstem and midbrain and their functional effect on hearing ability.

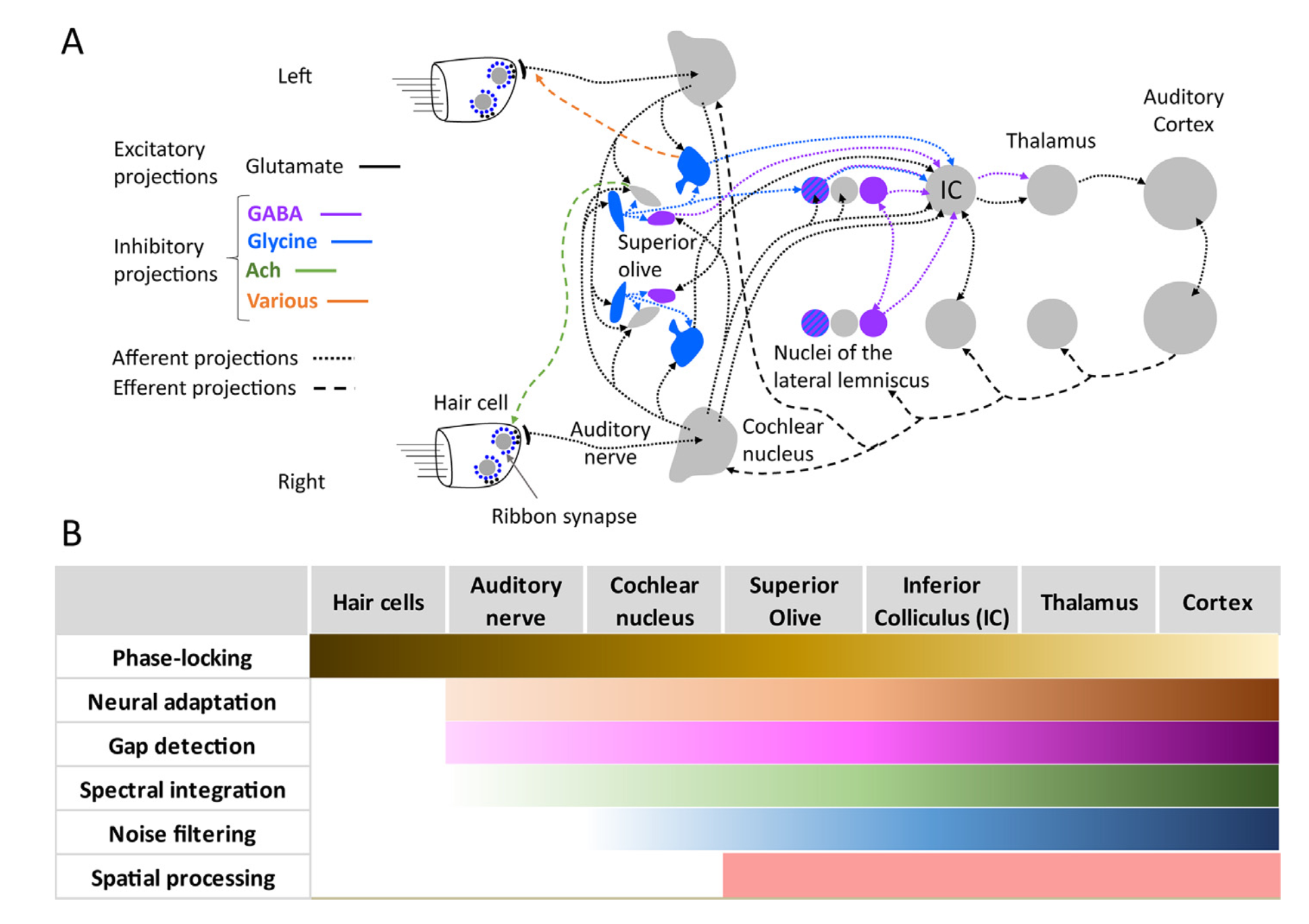

The illustration shows the major neurotransmitter connections of subcortical pathways: inhibitory and excitatory, ascending and descending. The table lists features of auditory processing, with darker colors indicating a stronger contribution (or potential impairment) of each structure.

Speech contains various acoustic hallmarks, such as pitch, timbre, and gaps between starts and stops of sound energy, that the brain uses to create distinct auditory “objects,” for example a single voice among multiple talkers in a noisy room. Our brains extract these acoustic cues by decoding spectral, temporal, and spatial information in order to identify and understand complex sounds.

Studies of mammalian species show that these sound features are extracted at the level of the midbrain, by nerve cells in a region called the inferior colliculus, through the integration of multiple ascending (“bottom-up”) pathways. These pathways run from inner ear hair cells to the auditory nerve; to the brainstem’s cochlear nucleus and superior olivary complex; to the midbrain’s inferior colliculus; to the forebrain’s thalamus; and to the auditory cortex.

For instance, several key functions of auditory processing previously attributed to the cortex, such as the selectivity of neurons to particular vocalizations, are now demonstrated in subcortical pathways. The cortex builds upon these coding strategies to produce typical hearing and communication abilities in most individuals.

Felix and team go on to detail auditory disorders that may result in large part from subcortical processing failures. Because neurotransmitters carry signals throughout the brain, including in subcortical regions, an imbalance between these chemicals’ excitatory and inhibitory actions (as typically happens with age) can affect the ability to hear complex sounds.

Disruptions of bottom-up processing may lead to hearing difficulties that are not revealed by standard hearing tests. These include auditory synaptopathy and auditory neuropathy (terms sometimes used interchangeably), also called “hidden hearing loss.” One concern with hidden hearing loss is that subcortical processing may be affected by noise levels previously thought to be relatively safe (as low as 80 decibels).

Likewise, central auditory processing disorders may result from abnormal descending (“top-down”) processing, leading to problems with selective attention and other hearing-related tasks.

The authors conclude, “Subcortical pathways represent early-stage processing on which sound perception is built; therefore problems with understanding complex sounds such as speech often have neural correlates of dysfunction in the auditory brainstem, midbrain, and thalamus.” The hope is that further study of animal models as well as human subjects will lead to tools to aid in the diagnosis and treatment of hearing disorders caused by problems with subcortical sound processing.

Richard A. Felix II, Ph.D., is a postdoctoral researcher in the Hearing and Communications Lab at Washington State University Vancouver. A 2016 Emerging Research Grants recipient, he was generously funded by the General Grand Chapter Royal Masons International. Read more about Felix in “Meet the Researcher,” in the Summer 2017 issue of Hearing Health.